Apache Airflow is a robust, open-source service written in Python that Data Engineers use to orchestrate workflows and pipelines. It exposes each pipeline's dependencies, code, logs, task triggers, progress, and success status, which makes it easier to troubleshoot problems when needed.

Airflow is flexible, scalable, and compatible with external data, and it can send alerts and messages via Slack or email when a task completes or fails. It does not impose restrictions on how a workflow should look, and its user-friendly interface lets you track and rerun jobs.

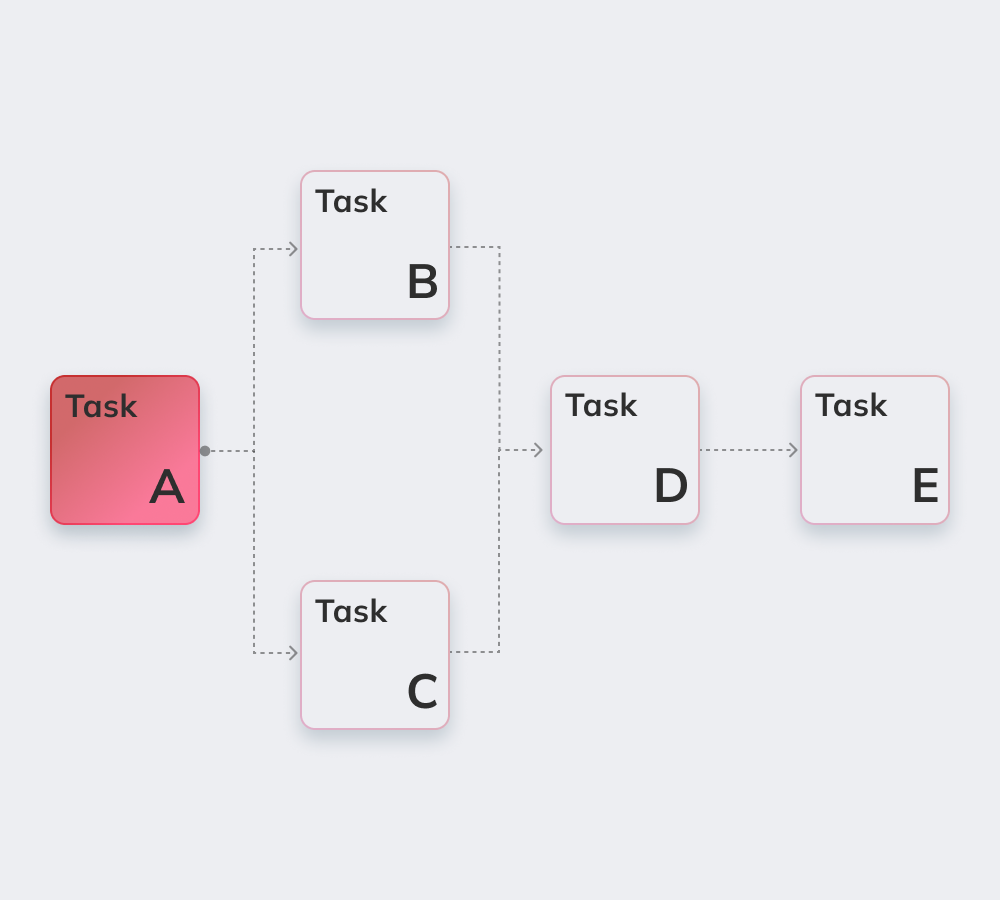

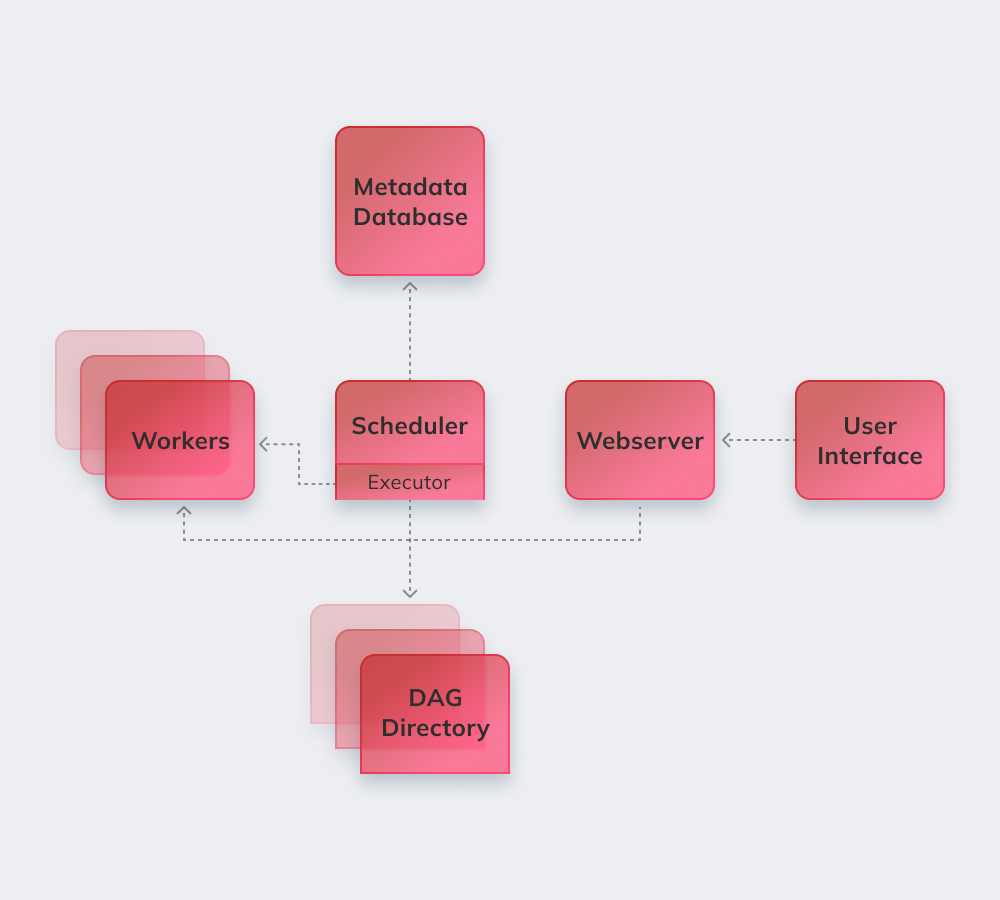

Let's look at how Airflow works using a simple example. First, Airflow parses all the DAGs in the background. Tasks that are due to run are marked SCHEDULED in the database. The Scheduler retrieves these tasks from the database and distributes them to Executors. The tasks then receive the QUEUED status, and once workers start executing them, they move to RUNNING. When a task is completed, the worker marks it as finished or failed depending on the outcome, and the Scheduler updates the status in the database.
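The lifecycle above can be sketched as a small state machine. This is a plain-Python illustration, not Airflow's own code: the names `TaskState`, `TRANSITIONS`, `advance`, and `run_task` are hypothetical, the terminal states are labeled SUCCESS and FAILED here, and real Airflow tracks additional states (for example retries and skipped tasks) that are left out.

```python
from enum import Enum


class TaskState(Enum):
    SCHEDULED = "scheduled"
    QUEUED = "queued"
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"


# Allowed transitions, following the simplified lifecycle described above.
TRANSITIONS = {
    TaskState.SCHEDULED: {TaskState.QUEUED},
    TaskState.QUEUED: {TaskState.RUNNING},
    TaskState.RUNNING: {TaskState.SUCCESS, TaskState.FAILED},
    TaskState.SUCCESS: set(),
    TaskState.FAILED: set(),
}


def advance(current, new):
    """Return the new state, or raise if the transition is not allowed."""
    if new not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.name} -> {new.name}")
    return new


def run_task(work):
    """Walk one task through its lifecycle and return the states it visited."""
    state = TaskState.SCHEDULED
    history = [state]
    for nxt in (TaskState.QUEUED, TaskState.RUNNING):
        state = advance(state, nxt)
        history.append(state)
    try:
        work()  # the actual job the worker executes
        final = TaskState.SUCCESS
    except Exception:
        final = TaskState.FAILED
    history.append(advance(state, final))
    return history


if __name__ == "__main__":
    print([s.name for s in run_task(lambda: None)])
```

Keeping the allowed transitions in one table makes it easy to see that a task cannot jump from SCHEDULED straight to RUNNING in this simplified model.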

Below, we list the most exciting features of Apache Airflow.

Basic Python knowledge is the only requirement to build solutions on the platform.

The service is free, with many active users worldwide.

You can seamlessly work with complementary products from Microsoft Azure, Google Cloud Platform, Amazon Web Services (AWS), and others.

You can track the status of scheduled and ongoing tasks in real-time.

Learn about the basic Apache Airflow principles below.

Airflow pipelines are defined as Python code, which allows them to be generated dynamically.

Users can define their own operators, executors, and libraries to suit their specific business environment.

Airflow has a modular architecture, so it can be scaled out across many workers as the workload grows.
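The "pipelines as Python code" principle can be illustrated with a short sketch. It deliberately uses only the Python standard library (`graphlib`, Python 3.9+) rather than Airflow's API, and the table names, task names, and `build_pipeline` helper are all hypothetical. The point is that an ordinary loop can generate tasks and their dependencies, which is what makes code-defined pipelines dynamic.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical source tables; in practice this list could come from a config file.
TABLES = ["orders", "customers"]


def build_pipeline(tables):
    """Return a mapping of task name -> set of upstream task names."""
    deps = {"report": set()}
    for table in tables:
        extract, load = f"extract_{table}", f"load_{table}"
        deps[extract] = set()          # extract tasks have no upstream tasks
        deps[load] = {extract}         # each load waits for its extract
        deps["report"].add(load)       # the report waits for every load
    return deps


pipeline = build_pipeline(TABLES)

# A valid execution order: every task appears after all of its upstream tasks.
order = list(TopologicalSorter(pipeline).static_order())

if __name__ == "__main__":
    print(order)
```

Adding a table to `TABLES` adds two tasks and their dependencies without touching the rest of the code. In Airflow the same idea would be expressed with its own DAG and operator classes.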

The benefits of Apache Airflow include automation, community, visualization of business processes, as well as proper monitoring and control. We will briefly go through all of them.

More than 1,000 contributors work on the open-source project and regularly take part in improving it.

Airflow is a good tool for building a "bigger picture" of one's workflow management system.

Automation makes Data Engineers’ jobs smoother and enhances the overall performance.

The built-in alert and notification system makes it possible to assign responsibilities and apply corrections when something goes wrong.
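The alerting idea can be sketched in a few lines of plain Python. This is not Airflow's notification code: `OWNERS`, `run_with_alert`, and the email addresses are hypothetical, and the `notify` argument stands in for a real email or Slack client. In Airflow itself, task-level callbacks such as `on_failure_callback` play a comparable role.

```python
# Hypothetical mapping of task name -> person responsible for it.
OWNERS = {"load_orders": "alice@example.com"}
DEFAULT_OWNER = "data-team@example.com"


def run_with_alert(task_name, work, notify):
    """Run `work`; on failure, notify the task's owner and return False."""
    try:
        work()
    except Exception as exc:
        owner = OWNERS.get(task_name, DEFAULT_OWNER)
        notify(owner, f"Task {task_name} failed: {exc!r}")
        return False
    return True


sent = []  # collects alerts instead of sending real messages


def record(recipient, message):
    sent.append((recipient, message))


# A task that always fails, to trigger the alert path.
failed_ok = run_with_alert("load_orders", lambda: 1 / 0, record)
```

Routing each alert to a named owner is one simple way to make responsibility for a failed task explicit.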

Many data engineering platforms built on Airflow reuse the service's core logic and benefits and add new features to solve specific challenges. They can be considered Apache Airflow alternatives since they offer very similar functionality:

Amazon Managed Workflows for Apache Airflow – a managed Airflow workflow orchestration service to set up and operate data pipelines on Amazon Web Services (AWS).

Airflow is a powerful data engineering tool that is compatible with third-party services and platforms. Migration to Airflow is smooth and trouble-free regardless of the size and specifications of the business.

Innowise delivers deep Airflow expertise for projects of any complexity and scope. Apache Airflow is a good choice for bringing order when a client suffers from poor communication between departments and is looking for more transparency in workflows.

Our skilled developers will implement a highly customized modular system that improves how you work with big data and makes Airflow processes fully managed and adapted to the specifics of your business environment.
