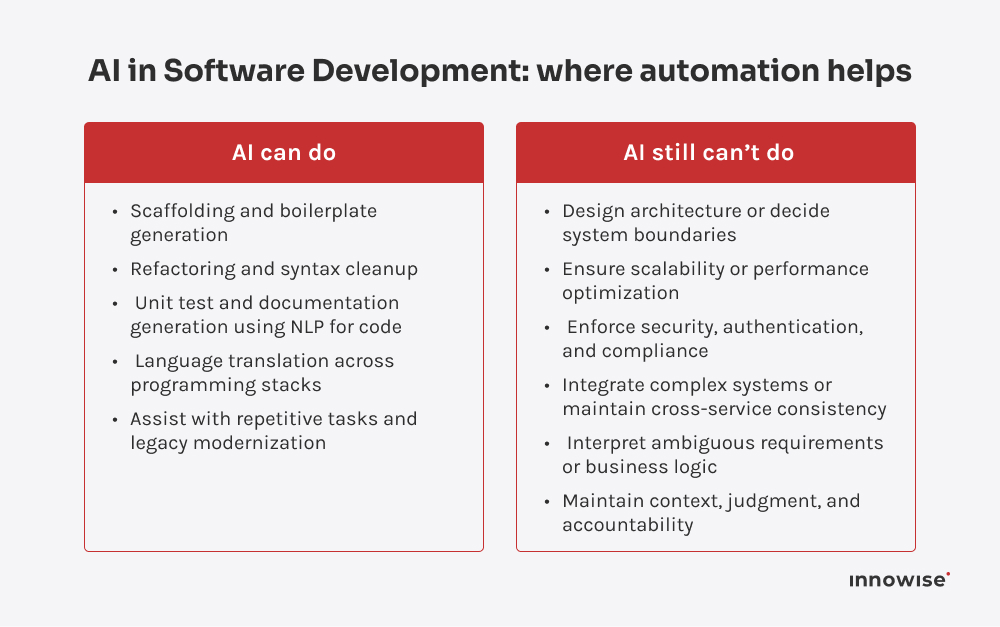

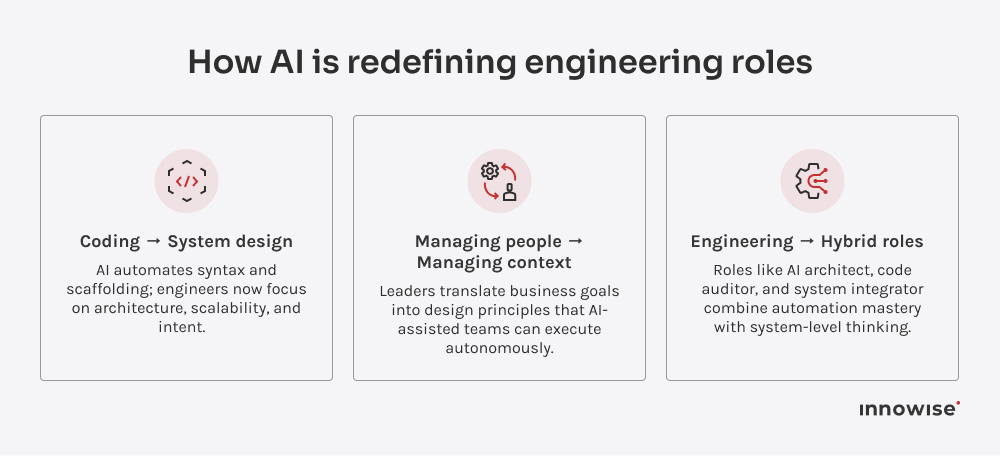

Not entirely. While AI can generate large portions of functional code, it still lacks contextual understanding, domain reasoning, and accountability. The idea that AI will replace programmers misunderstands what engineers actually do: design systems, validate logic, and align technology with business needs. AI speeds up typing, not thinking. Skilled developers who guide automation and ensure architectural clarity will remain indispensable.
