As reports from across the industry indicate, there’s now a growing specialized sector for engineers who focus on correcting AI-generated code errors.

The pattern has become remarkably consistent. Companies turn to ChatGPT to generate migration scripts, integrations, or entire features, hoping to save time and cut costs. After all, the technology appears fast and accessible.

Then the systems fail.

And they call us.

Recently, we’ve been getting more and more of these requests. Not to ship a new product, but to untangle whatever mess was left behind after someone trusted a language model with their production code.

At this point, it’s starting to look like its own niche industry. Fixing AI-generated bugs is now a billable service. And in some cases, a very expensive one.

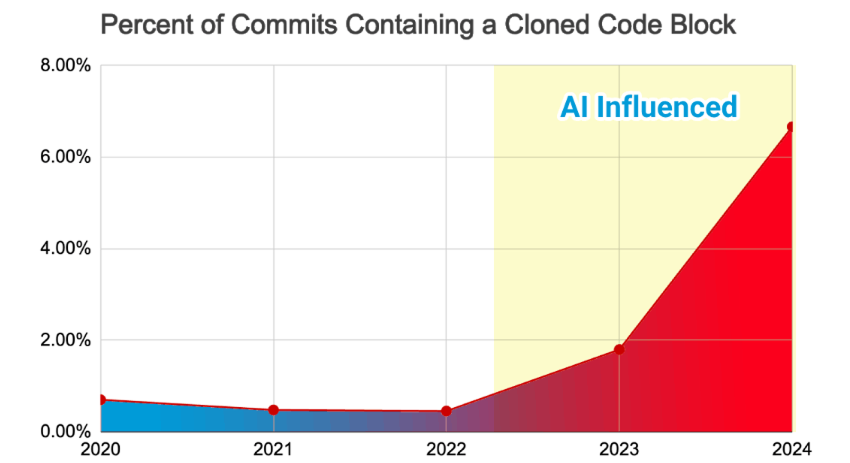

GitClear’s 2024 report confirms what we’ve seen with clients: AI coding tools are speeding up delivery, but also fueling duplication, reducing reuse, and inflating long-term maintenance costs.

Let’s be clear, however: we’re not “against AI.” We use it too, and it’s helpful in the right context, with the right guardrails. But what frustrates me about the overreliance on AI and its widespread implications (and probably frustrates you too) is the magical thinking: the idea that a language model can replace real engineering work.

It can’t. And as the saying goes, the proof is in the pudding. When companies pretend otherwise, they end up paying someone like us to clean it up.

So, what does one of these clean-up jobs look like? Here’s what the AI aficionados aren’t telling you when it comes to time lost and money wasted.

The message usually comes in like this:

“Hey, can you take a look at a microservice we built? We used ChatGPT to generate the first version. We pushed it to staging, and now our RabbitMQ queue is completely flooded.”

But here’s the thing: the symptoms show up much later. Sometimes days later. And when they do, it’s rarely obvious that the root cause was AI-generated code. It just looks like… something’s off.

After a dozen of these cases, patterns start to emerge:

And of course, when everything collapses, the AI doesn’t leave you a comment saying, “By the way, I’m guessing here.”

That part’s on you.

This one came from a fast-growing fintech company.

They were rolling out a new version of their customer data model, splitting one large JSONB field in Postgres into multiple normalized tables. Pretty standard stuff. But with tight deadlines and not enough hands, one of the developers decided to “speed things up” by asking ChatGPT to generate a migration script.

It looked good on the surface. The script parsed the JSON, extracted contact info, and inserted it into a new user_contacts table.

So they ran it.

No dry run. No backup. Straight into staging, which, as it turns out, was sharing data with production through a replica.

A few hours later, customer support started getting emails. Users weren’t receiving payment notifications. Others had missing phone numbers in their profiles. That’s when they called us.

The script didn’t account for NULL values or missing keys inside the JSON structure. And it used ON CONFLICT DO NOTHING, so any rows that hit a conflict were silently skipped.

This one came from a legal tech startup building a document management platform for law firms. One of their core features was integrating with a government e-notification service — a third-party REST API with OAuth 2.0 and strict rate limiting: 50 requests per minute, no exceptions.

Instead of assigning the integration to an experienced backend dev, someone on the team decided to “prototype it” using ChatGPT. They dropped in the OpenAPI spec, asked for a Python client, and got a clean-looking script built on requests, with retry logic using tenacity and token refresh.

Looked solid on paper. So they shipped it.

Here’s what actually happened:

The script didn’t check the X-RateLimit-Remaining or Retry-After headers. It just kept sending requests blindly.

We rewrote it using httpx.AsyncClient, implemented a semaphore-based throttle, added exponential backoff with jitter, and properly handled Retry-After and rate-limit headers.

Two engineers, spread over two and a half days. Cost to client: around $3,900.
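For readers who want to see what that kind of fix involves, here is a minimal sketch of the throttling and retry logic. It is not the code from this engagement. The transport is abstracted as a hypothetical `send` coroutine that returns a status code and headers (in practice it might wrap `httpx.AsyncClient`), and the class name `ThrottledCaller` and its defaults are illustrative. An `asyncio.Semaphore` caps in-flight requests, a shared schedule spaces calls so they stay under 50 per minute, and 429/5xx responses are retried using the server’s `Retry-After` value when it is given in seconds, or exponential backoff with full jitter otherwise. The sketch does not read `X-RateLimit-Remaining`; the client-side spacing stands in for it.

```python
import asyncio
import random
import time
from typing import Awaitable, Callable, Mapping, Optional, Tuple

# Hypothetical transport: any coroutine function returning (status_code, headers).
# In production this might wrap httpx.AsyncClient; it is kept abstract here.
Send = Callable[[], Awaitable[Tuple[int, Mapping[str, str]]]]


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0) -> float:
    """Exponential backoff with full jitter: uniform in [0, min(cap, base * 2**attempt)]."""
    return random.uniform(0.0, min(cap, base * (2 ** attempt)))


def retry_after_seconds(headers: Mapping[str, str]) -> Optional[float]:
    """Return Retry-After in seconds if it uses the delay-seconds form, else None."""
    value = headers.get("Retry-After")  # plain dicts are case-sensitive; httpx.Headers is not
    if value is not None and value.strip().isdecimal():
        return float(value.strip())
    return None  # missing, or the HTTP-date form, which this sketch does not parse


class ThrottledCaller:
    def __init__(self, per_minute: int = 50, max_concurrent: int = 5, max_attempts: int = 5) -> None:
        # Construct inside a running event loop (e.g. within an async main()).
        self._sem = asyncio.Semaphore(max_concurrent)  # caps in-flight requests
        self._lock = asyncio.Lock()                     # guards the shared schedule
        self._interval = 60.0 / per_minute              # 50/min -> one request every 1.2 s
        self._next_slot = 0.0
        self._max_attempts = max_attempts

    async def _wait_for_slot(self) -> None:
        # Reserve the next send time so requests are spaced at least _interval apart.
        async with self._lock:
            now = time.monotonic()
            start = max(now, self._next_slot)
            self._next_slot = start + self._interval
        await asyncio.sleep(start - now)

    async def call(self, send: Send) -> Tuple[int, Mapping[str, str]]:
        async with self._sem:
            attempt = 0
            while True:
                await self._wait_for_slot()  # retries also consume rate-limit slots
                status, headers = await send()  # network exceptions propagate to the caller
                retryable = status == 429 or status >= 500
                if not retryable or attempt + 1 >= self._max_attempts:
                    return status, headers
                # Honor the server's Retry-After hint; otherwise back off with jitter.
                delay = retry_after_seconds(headers)
                await asyncio.sleep(delay if delay is not None else backoff_delay(attempt))
                attempt += 1
```

Each request becomes `await caller.call(send_fn)` inside async code. Holding the semaphore during backoff is deliberate: while the API is pushing back, fewer requests stay in flight.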

The bigger problem is that their largest customer — a law firm with time-sensitive filings — missed two court submission windows due to the outage. The client had to do damage control and offer a discount to keep the account.

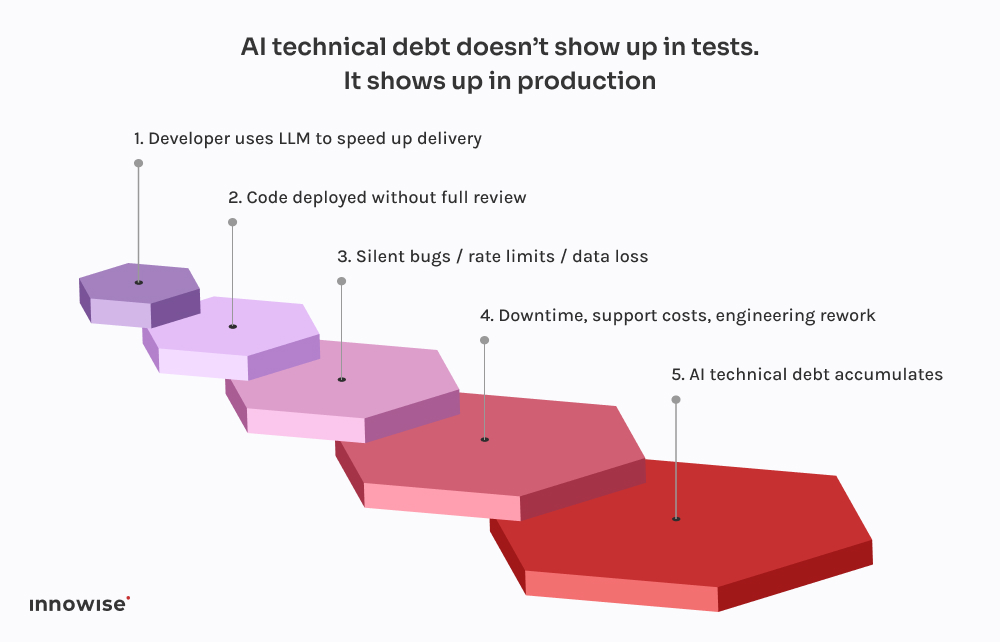

All because a language model didn’t understand the difference between “working code” and “production-ready code.” And just like that, another layer of AI technical debt was quietly added to the stack.

What’s worse is that the code looks correct. It’s syntactically clean. It passes linters. It might even be covered by a basic test. But it’s missing the one thing that actually matters: context.

That’s why these bugs don’t show up right away. They wait for Friday night deployments, for high-traffic windows, for rare edge cases. That’s the nature of AI technical debt: it’s invisible until it breaks something critical.

What they’re not good at is design. Or context. Or safe defaults.

That’s why we’ve built our workflows to treat LLM output as suggestions, not as a source of truth. Here’s what that looks like in practice:

Used right, it’s a time-saver. Used blindly, it’s a time bomb.

Let them help with repetitive code. Let them propose solutions. But don’t trust them with critical decisions. Any code generated by AI should be reviewed by a senior engineer, no exceptions.

Whether it’s commit tags, metadata, or comments in the code, make it clear which parts came from AI. That makes it easier to audit, debug, and understand the risk profile later on.
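One lightweight way to enforce commit tags is a Git commit-msg hook that rejects commits without a provenance trailer. The trailer name `AI-Assisted` and its yes/no values are a hypothetical team convention, not a Git standard. What Git does guarantee is that the hook receives the path of the commit-message file as its first argument and that a non-zero exit aborts the commit.

```python
#!/usr/bin/env python3
import re
import sys
from typing import Optional

# Hypothetical convention: each commit message carries "AI-Assisted: yes" or "AI-Assisted: no".
TRAILER = re.compile(r"^AI-Assisted:[ \t]*(yes|no)[ \t]*$", re.IGNORECASE | re.MULTILINE)


def ai_assisted(message: str) -> Optional[bool]:
    """True/False from the trailer, or None if the trailer is missing."""
    match = TRAILER.search(message)
    if match is None:
        return None
    return match.group(1).lower() == "yes"


def main(message_path: str) -> int:
    with open(message_path, encoding="utf-8") as f:
        message = f.read()
    if ai_assisted(message) is None:
        print("commit rejected: add an 'AI-Assisted: yes' or 'AI-Assisted: no' trailer", file=sys.stderr)
        return 1  # non-zero exit makes Git abort the commit
    return 0


# Git invokes commit-msg hooks with the path to the message file as the only argument.
if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

Saved as `.git/hooks/commit-msg` and made executable, this runs on every local commit. Hooks are not shared through clones, so a CI check that applies the same `ai_assisted` test is still worth having.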

Decide as a team where it’s acceptable to use LLMs and where it’s not. Boilerplate? Sure. Auth flows? Maybe. Transactional systems? Absolutely not without review. Make the policy explicit and part of your engineering standards.
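A policy is easier to enforce once it is machine-readable. This minimal sketch maps path patterns to three tiers that mirror the paragraph above (allowed, ask first, review required). The directory names, tier labels, and the `tier_for` / `needs_escalation` helpers are hypothetical. A CI job could feed `needs_escalation` the files touched by AI-assisted commits.

```python
from fnmatch import fnmatch
from typing import Iterable, List, Tuple

# Hypothetical repository layout and tiers; the first matching pattern wins.
POLICY: List[Tuple[str, str]] = [
    ("migrations/*", "review-required"),  # transactional / data-changing code
    ("billing/*", "review-required"),
    ("auth/*", "ask-first"),              # LLM use needs explicit sign-off
    ("*", "allowed"),                     # boilerplate and everything else
]


def tier_for(path: str) -> str:
    for pattern, tier in POLICY:
        if fnmatch(path, pattern):  # note: fnmatch's "*" also matches "/"
            return tier
    return "review-required"  # fail closed if no pattern matches


def needs_escalation(ai_assisted_paths: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (path, tier) for AI-assisted files that cannot merge on a standard review."""
    flagged = []
    for path in ai_assisted_paths:
        tier = tier_for(path)
        if tier != "allowed":
            flagged.append((path, tier))
    return flagged
```

Ordering matters here: specific patterns come first and the catch-all comes last, so adding a new sensitive directory means adding one line above `"*"`.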

If you’re letting AI-generated code touch production, you need to assume something will eventually break. Add synthetic checks. Rate-limit monitors. Dependency tracking. Make the invisible visible, especially when the original author isn’t human.
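As one example of a rate-limit monitor, here is a minimal sliding-window check that raises an alert when too large a share of recent responses are HTTP 429. The class name, window length, threshold, and minimum sample size are illustrative assumptions. In a real system this signal would more likely come from your existing metrics stack than from in-process code.

```python
import time
from collections import deque
from typing import Deque, Optional, Tuple


class RateLimitMonitor:
    """Alert when the share of HTTP 429 responses in a sliding time window is too high."""

    def __init__(self, window_seconds: float = 60.0, threshold: float = 0.05, min_samples: int = 20) -> None:
        self.window_seconds = window_seconds
        self.threshold = threshold      # e.g. 0.05 = alert above 5% throttled responses
        self.min_samples = min_samples  # avoid alerting on a handful of requests
        self._events: Deque[Tuple[float, bool]] = deque()  # (timestamp, was_429), oldest first

    def _evict(self, now: float) -> None:
        # Drop events older than the window; assumes timestamps arrive in order.
        cutoff = now - self.window_seconds
        while self._events and self._events[0][0] < cutoff:
            self._events.popleft()

    def record(self, status: int, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        self._events.append((now, status == 429))
        self._evict(now)

    def should_alert(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        self._evict(now)
        total = len(self._events)
        if total < self.min_samples:
            return False
        throttled = sum(1 for _, was_429 in self._events if was_429)
        return throttled / total > self.threshold
```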

The biggest AI-driven failures we’ve seen didn’t come from “bad” code. They came from silent errors — missing data, broken queues, retry storms — that went undetected for hours. Invest in observability, fallback logic, and rollbacks. Especially if you’re letting ChatGPT write migrations.
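The fintech migration failed on NULL values, missing keys, and rows quietly skipped by ON CONFLICT DO NOTHING. Two cheap guards address those problems. The first is an extraction step that records every rejected row with a reason instead of dropping it. The second is a reconciliation check that compares the number of extracted rows with the number the database reports as inserted. As far as we know, PostgreSQL’s INSERT command tag counts only rows actually inserted, so conflicts show up as a shortfall. This is a minimal sketch that assumes the old JSONB column arrives as text, with contact details stored under a hypothetical `contact.email` / `contact.phone` layout. The names `extract_contacts`, `reconcile`, and `ExtractionReport` are illustrative.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Iterable, List, Optional, Tuple

# Hypothetical shapes: source rows are (user_id, profile_json_text or None for SQL NULL).
ContactRow = Tuple[int, Optional[str], Optional[str]]  # (user_id, email, phone)


@dataclass
class ExtractionReport:
    rows: List[ContactRow] = field(default_factory=list)           # ready to insert
    rejected: List[Tuple[int, str]] = field(default_factory=list)  # (user_id, reason)


def extract_contacts(records: Iterable[Tuple[int, Optional[str]]]) -> ExtractionReport:
    report = ExtractionReport()
    for user_id, raw in records:
        if raw is None:
            report.rejected.append((user_id, "profile is NULL"))
            continue
        try:
            profile: Any = json.loads(raw)
        except json.JSONDecodeError:
            report.rejected.append((user_id, "profile is not valid JSON"))
            continue
        contact = profile.get("contact") if isinstance(profile, dict) else None
        if not isinstance(contact, dict):
            report.rejected.append((user_id, "missing 'contact' object"))
            continue
        email, phone = contact.get("email"), contact.get("phone")
        if email is None and phone is None:
            report.rejected.append((user_id, "contact has neither email nor phone"))
            continue
        report.rows.append((user_id, email, phone))
    return report


def reconcile(report: ExtractionReport, inserted_count: int) -> None:
    """Fail loudly if the database did not insert every extracted row.

    inserted_count is the row count the database reports for the INSERT; rows
    skipped by ON CONFLICT DO NOTHING are not counted, so a mismatch exposes them.
    """
    expected = len(report.rows)
    if inserted_count != expected:
        raise RuntimeError(
            f"extracted {expected} contact rows but the database reported {inserted_count} inserts"
        )
```

Running both steps inside a transaction that is rolled back, against a copy of the data, gives you the dry run that was missing. Reviewing `report.rejected` before the real run shows exactly which customers would have lost data.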

In short, AI can save your team time, but it can’t take responsibility.

That’s still a human job.
